توضیحات

ABSTRACT

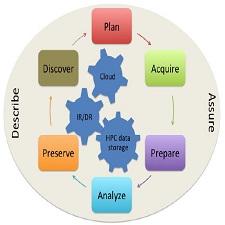

As science becomes more data-intensive and collaborative, researchers increasingly use larger and more complex data to answer research questions. The capacity of storage infrastructure, the increased sophistication and deployment of sensors, the ubiquitous availability of computer clusters, the development of new analysis techniques, and larger collaborations allow researchers to address grand societal challenges in a way that is unprecedented. In parallel, research data repositories have been built to host research data in response to the requirements of sponsors that research data be publicly available. Libraries are re-inventing themselves to respond to a growing demand to manage, store, curate and preserve the data produced in the course of publicly funded research. As librarians and data managers are developing the tools and knowledge they need to meet these new expectations, they inevitably encounter conversations around Big Data. This paper explores definitions of Big Data that have coalesced in the last decade around four commonly mentioned characteristics: volume, variety, velocity, and veracity. We highlight the issues associated with each characteristic, particularly their impact on data management and curation. We use the methodological framework of the data life cycle model, assessing two models developed in the context of Big Data projects and find them lacking. We propose a Big Data life cycle model that includes activities focused on Big Data and more closely integrates curation with the research life cycle. These activities include planning, acquiring, preparing, analyzing, preserving, and discovering, with describing the data and assuring quality being an integral part of each activity. We discuss the relationship between institutional data curation repositories and new long-term data resources associated with high performance computing centers, and reproducibility in computational science. We apply this model by mapping the four characteristics of Big Data outlined above to each of the activities in the model. This mapping produces a set of questions that practitioners should be asking in a Big Data project.

INTRODUCTION

As science becomes more data-intensive and collaborative, researchers increasingly use larger and more complex data to answer research questions. The capacity of storage infrastructure, the increased sophistication and deployment of sensors, the ubiquitous availability of computer clusters, the development of new analysis techniques, and larger collaborations allow researchers to address “grand challenges”1 in a way that is unprecedented. Examples of such challenges include the impact of climate change on regional agriculture and food supplies, the need for reliable and sustainable sources of energy, and the development of innovative methods for the treatment and prevention of infectious diseases. Multi-disciplinary, sometimes international teams meet these challenges and countless others by collecting, generating, cross-referencing, analysing, and exchanging datasets in order to produce technologies and solutions to the problems. These advancements in science are enabled by Big Data, recently defined as a cultural, technological, and scholarly phenomenon (Boyd and Crawford, 2012).

Year : 2015

Publisher : IJDC

By : Line Pouchard

File Information : English Language /17 Page /Size : 288 K

Download : click

سال : 2015

ناشر : IJDC

کاری از : Line Pouchard

اطلاعات فایل : زبان انگلیسی /17 صفحه /حجم : 288 K

لینک دانلود : روی همین لینک کلیک کنید

نقد و بررسیها

هنوز بررسیای ثبت نشده است.